Unsupervised Deep Learning via Affinity Diffusion

Accepted by AAAI Conference on Artificial Intelligence (AAAI'20)

Queen Mary University of London

Description

Convolutional neural networks (CNNs) trained in a supervised fashion have significantly boosted the state-of-the-art performance in computer vision. Moreover, the feature representations of a supervised CNN (e.g. trained for classification on ImageNet) generalise to new tasks. Despite this remarkable success, the approach relies on a number of stringent assumptions that do not always hold. Because it only needs access to unlabelled data, which is typically available at scale, unsupervised deep learning provides a conceptually generic and scalable solution to these limitations.

One intuitive strategy for unsupervised deep learning is joint learning of feature representations and data clustering. This objective is extremely hard due to the numerous combinatorial configurations of unlabelled data alongside highly complex inter-class decision boundaries. To avoid clustering errors and their subsequent propagation, instance learning has been proposed, whereby every single sample is treated as an independent class. However, this simplified supervision is often rather ambiguous, particularly around class centres, and therefore leads to weak class discrimination. As an intermediate representation, tiny neighbourhoods have been leveraged to preserve the advantages of both data clustering and instance learning, but this method is restricted by the small size of local neighbourhoods.
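The instance-learning idea described above can be illustrated with a minimal sketch. It assumes L2-normalised features, a softmax over dot-product similarities, and a temperature of 0.1. The function names and the temperature value are hypothetical choices made for illustration and are not taken from the paper.

```python
import math


def instance_probabilities(query, memory, temperature=0.1):
    """Softmax over similarities between a query and every stored instance.

    Each entry of `memory` plays the role of one "class", i.e. one sample.
    The temperature value is an assumption made for this sketch.
    """
    logits = [sum(q * m for q, m in zip(query, feat)) / temperature
              for feat in memory]
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(logit - peak) for logit in logits]
    total = sum(exps)
    return [e / total for e in exps]


def instance_loss(query, index, memory, temperature=0.1):
    """Negative log-probability that `query` is recognised as instance `index`."""
    return -math.log(instance_probabilities(query, memory, temperature)[index])


# Three unit-length features; the first two are visually "close".
memory = [(1.0, 0.0), (0.8, 0.6), (0.0, 1.0)]
loss = instance_loss(memory[0], 0, memory)
```

In this toy setting the second feature is treated as a negative for the first even though the two are similar, which is the kind of ambiguity the paragraph above refers to.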

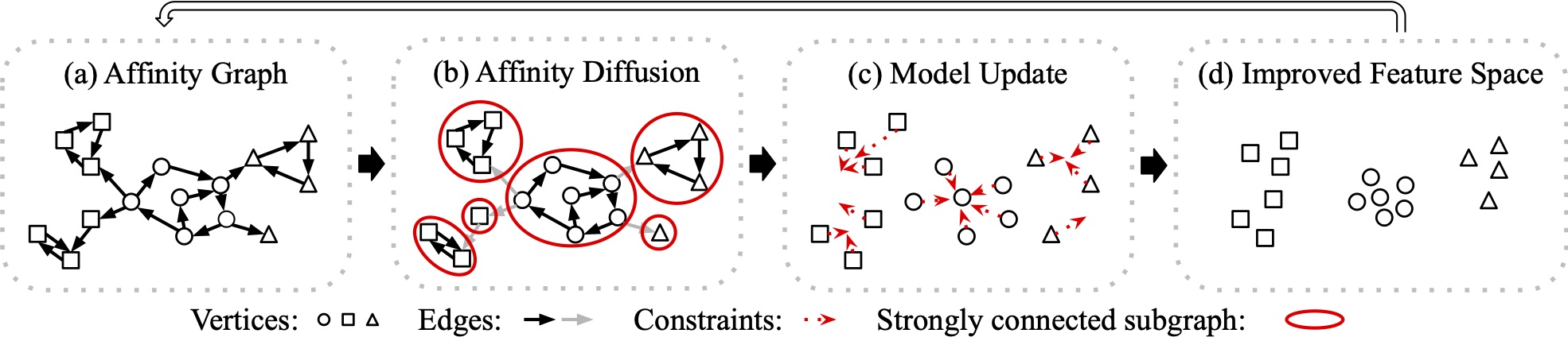

In this work, we aim to solve the algorithmic limitations of existing unsupervised deep learning methods. To that end, we propose a general-purpose Progressive Affinity Diffusion (PAD) method for training unsupervised models. Requiring no prior knowledge of the number of classes, PAD infers data groups during training in a manner adaptive to model matureness, so as to reveal the underlying sample-to-class memberships more reliably.

We make three contributions:

- We propose a novel idea of leveraging strongly connected subgraphs as a self-supervision structure for more reliable unsupervised deep learning.

- We formulate a Progressive Affinity Diffusion (PAD) method for model-matureness-adaptive discovery of strongly connected subgraphs during training through affinity diffusion across adjacent neighbourhoods.

- We design a group-structure-aware objective loss formulation that exploits strongly connected subgraphs more discriminatively in model representation learning.
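To make the notion of a strongly connected subgraph concrete, the following is a minimal sketch and not the PAD procedure itself. It assumes a directed k-nearest-neighbour graph built from cosine similarity and extracts strongly connected components with the standard Kosaraju algorithm, standing in for the paper's affinity diffusion. The function names, the similarity measure and the value of `k` are hypothetical choices for illustration.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def knn_graph(features, k):
    """Directed graph in which each sample points to its k most similar samples."""
    graph = {}
    for i, feat in enumerate(features):
        others = [j for j in range(len(features)) if j != i]
        others.sort(key=lambda j: cosine(feat, features[j]), reverse=True)
        graph[i] = others[:k]
    return graph


def strongly_connected_components(graph):
    """Kosaraju's algorithm, written iteratively to avoid deep recursion."""
    # Pass 1: record nodes in order of depth-first-search completion.
    order, seen = [], set()
    for start in graph:
        if start in seen:
            continue
        seen.add(start)
        stack = [(start, iter(graph[start]))]
        while stack:
            node, neighbours = stack[-1]
            for nxt in neighbours:
                if nxt not in seen:
                    seen.add(nxt)
                    stack.append((nxt, iter(graph[nxt])))
                    break
            else:  # all neighbours explored
                order.append(node)
                stack.pop()
    # Pass 2: search the reversed graph in reverse completion order.
    reverse = {node: [] for node in graph}
    for src, dsts in graph.items():
        for dst in dsts:
            reverse[dst].append(src)
    components, assigned = [], set()
    for root in reversed(order):
        if root in assigned:
            continue
        assigned.add(root)
        component, stack = [], [root]
        while stack:
            node = stack.pop()
            component.append(node)
            for prev in reverse[node]:
                if prev not in assigned:
                    assigned.add(prev)
                    stack.append(prev)
        components.append(sorted(component))
    return components


# Toy features: two tight groups and one sample lying between them.
features = [(1.0, 0.0), (0.9, 0.1), (0.95, 0.05),
            (0.0, 1.0), (0.1, 0.9), (0.05, 0.95), (0.7, 0.7)]
components = strongly_connected_components(knn_graph(features, k=2))
groups = sorted(c for c in components if len(c) > 1)
print(groups)  # [[0, 1, 2], [3, 4, 5]]
```

In this toy example the in-between sample points to members of both groups, but no sample points back to it, so it stays a singleton and could still be handled as an individual instance. Requiring mutual reachability is what makes the grouping conservative.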

Benchmarks

Extensive experiments are conducted on both image classification and cluster analysis using the following six datasets. The results show the advantages of our PAD method over a wide variety of existing state-of-the-art approaches.

- CIFAR10(/100): An image dataset with 50,000/10,000 train/test images from 10 (/100) object classes. Each class has 6,000 (/600) images of size \(32\!\times\!32\).

- SVHN: A Street View House Numbers dataset including 10 classes of digit images.

- ImageNet: A large object dataset with 1,000 classes, containing 1.2 million images for training and 50,000 for test.

- MNIST: A hand-written digits dataset with 60,000/10,000 train/test images from 10 digit classes.

- STL10: An ImageNet-adapted dataset containing 500/800 train/test samples per class from 10 classes, as well as 100,000 unlabelled images from auxiliary unknown classes.

Please refer to the paper for more details, and feel free to contact me with any questions.